Go to our Rockset Group to evaluate earlier Workplace Hours or to see what’s arising.

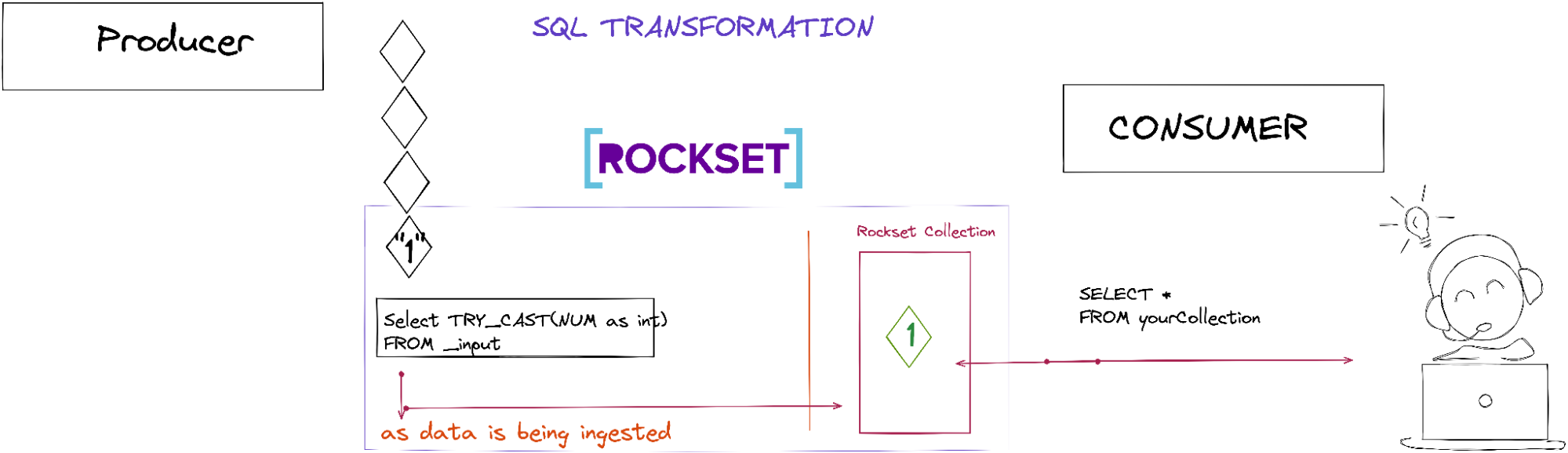

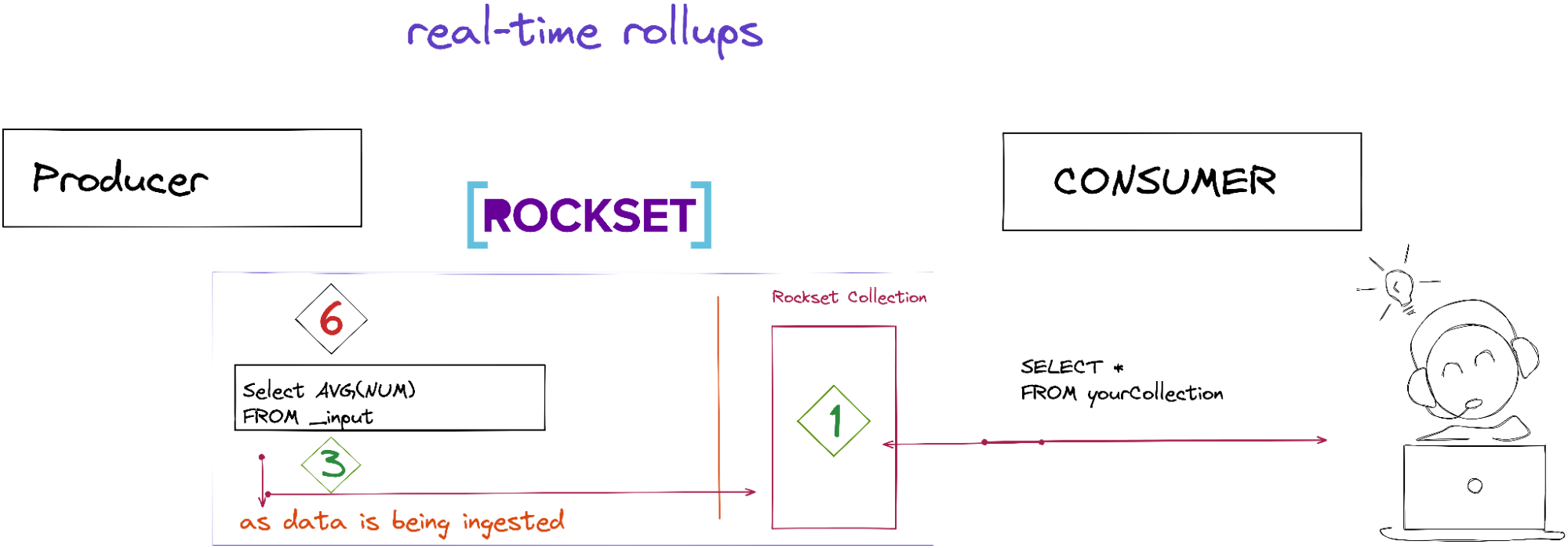

Throughout our Workplace Hours just a few weeks in the past, Tyler and I went over what are SQL transformations and real-time rollups, methods to apply them, and the way they have an effect on your question efficiency and index storage dimension. Under, we’ll cowl a number of the highlights.

SQL transformations and real-time rollups happen at ingestion time earlier than the Rockset assortment is populated with information. Right here’s the diagram I did throughout Rockset Workplace Hours.

Tyler demonstrated how question efficiency and storage are impacted while you use SQL transformations and real-time rollups with three totally different queries. Under, I’ll describe how we constructed the gathering and what we’re doing within the queries.

Preliminary Question With no SQL Transformations or Rollups Utilized

On this question, we’re constructing a time-series object that grabs probably the most lively tweeters inside the final day. There are not any SQL transformations or rollups, so the gathering accommodates simply the uncooked information.

-- Preliminary question in opposition to the plain assortment 1day: 12sec

with _data as (

SELECT

depend(*) tweets,

solid(DATE_TRUNC('HOUR',PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', t.created_at)) as string) as event_date_hour,

t.person.id,

arbitrary(t.person.title) title

FROM

officehours."twitter-firehose" t trace(access_path=column_scan)

the place

t.person.id will not be null

and t.person.id will not be undefined

and PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', t.created_at) > CURRENT_TIMESTAMP() - DAYS(1)

group by

t.person.id,

event_date_hour

order by

event_date_hour desc

),

_intermediate as (

choose

array_agg(event_date_hour) _keys,

array_agg(tweets) _values,

id,

arbitrary(title) title

from

_data

group by

_data.id

)

choose

object(_keys, _values) as timeseries,

id,

title

from

_intermediate

order by size(_keys) desc

restrict 100

Supply: GitHub gist

- On line 4 we’re counting the whole tweets

- On line 7 we’re pulling the ARBITRARY for

t.person.title— you may learn extra about ARBITRARY - On strains 15 and 16 we’re doing aggregations on

t.person.idandevent_date_hour - On line 5 we create the

event_date_hourby doing a CAST - On line 11-12 we filter person.id that’s not null or undefined

- On line 13 we get the most recent tweeters from the final day

- On strains 14-16 we do a GROUP BY with

t.person.idandevent_date_hour - On strains 20-37 we construct our time collection object

- On line 38 we return the highest 100 tweeters

This inefficient contrived question was run on dwell information with a medium VI and took about 7 seconds to execute.

Second Question With SQL Transformation Utilized Solely

Within the second question, we utilized SQL transformations once we created the gathering.

SELECT

*

, solid(DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as string) as event_date_hour

, PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at) as _event_time

, solid(i.id as string) as id

FROM

_input i

the place

i.person.id will not be null

and that i.person.id will not be undefined

Supply: GitHub gist

- On line 3, we create an

event_date_hour - On line 4, we create an

event_time - On line 5, we create an id as a string

- On strains 9 and 10, we choose

person.idthat’s not null or undefined

After we apply the transformations, our SQL question seems extra simplified than the preliminary question:

with _data as (

SELECT

depend(*) tweets,

event_date_hour,

t.person.id,

arbitrary(t.person.title) title

FROM

officehours."twitter-firehose_sqlTransformation" t trace(access_path=column_scan)

the place

_event_time > CURRENT_TIMESTAMP() - DAYS(1)

group by

t.person.id,

event_date_hour

order by

event_date_hour desc

),

_intermediate as (

choose

array_agg(event_date_hour) _keys,

array_agg(tweets) _values,

id,

arbitrary(title) title

from

_data

group by

_data.id

)

choose

object(_keys, _values) as timeseries,

id,

title

from

_intermediate

order by size(_keys) desc

restrict 100

Supply: GitHub gist

- On line 3, we’re counting the whole tweets

- On line 6 we’re pulling the ARBITRARY for

t.person.title - On line 10, the filter is now on the timestamp

- On strains 11-13 we nonetheless do a GROUP BY with

t.person.idandevent_date_hour - On strains 17-34 we nonetheless create our time-series object

Mainly, we excluded no matter we utilized throughout SQL transformations within the question itself. Once we run the question, the storage index dimension doesn’t change an excessive amount of, however the question efficiency goes from seven seconds to 3 seconds or so. By doing SQL transformations, we save on compute, and it exhibits — the question performs a lot sooner.

Third Question With SQL Transformation and Rollups Utilized

Within the third question we carried out SQL transformations and rollups once we created the gathering.

SELECT

depend(*) tweets,

solid(DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as string) as event_date_hour_str,

DATE_TRUNC('HOUR', PARSE_TIMESTAMP('%a %h %d %H:%M:%S %z %Y', i.created_at)) as event_date_hour,

solid(i.person.id as string) id,

arbitrary(i.person.title) title

FROM

_input i

the place

i.person.id will not be null

and that i.person.id will not be undefined

group by

i.person.id,

event_date_hour_str,

event_date_hour

Supply: GitHub gist

Along with what we did utilized earlier for the SQL transformations, we’re now making use of rollups as nicely.

- On line 2, we’re counting all of the tweets

- On line 6 we’re pulling the ARBITRARY

- On strains 12-15 we’re making use of the GROUP_BY

So now, our closing SQL question seems like this:

with _data as (

SELECT

tweets,

event_date_hour_str,

event_date_hour,

id,

title

FROM

officehours."twitter-firehose-rollup" t trace(access_path=column_scan)

the place

t.event_date_hour > CURRENT_TIMESTAMP() - DAYS(1)

order by

event_date_hour desc

),

_intermediate as (

choose

array_agg(event_date_hour_str) _keys,

array_agg(tweets) _values,

id,

arbitrary(title) title

from

_data

group by

_data.id

)

choose

object(_keys, _values) as timeseries,

id,

title

from

_intermediate

order by size(_keys) desc

Restrict 100

Supply: GitHub gist

Once we apply the SQL transformations with the rollups, our question goes from a womping seven seconds to 2 seconds. Additionally, our storage index dimension goes from 250 GiB to 11 GiB now!

Benefits/Issues for SQL Transformations and Actual-Time Rollups

SQL Transformations

Benefits:

- Improves question efficiency

- Can drop and masks fields at ingestion time

- Enhance compute value

Consideration:

- Have to know what your information seems like

Actual-Time Rollups

Benefits:

- Improves question efficiency and storage index dimension

- Knowledge is up to date inside the second

- Don’t want to fret about out-of-order arrivals

- Precisely-once semantics

- Enhance compute value

Issues:

- Knowledge decision — You’ll lose the uncooked information decision. When you want a duplicate of the uncooked information, create one other assortment with out rollups. If you wish to keep away from double storage, you may set a retention coverage while you create a group.

Rockset’s SQL-based transformations and rollups assist you to carry out information transformation that improves question efficiency and reduces storage index dimension. The ultimate information transformation is what’s continued within the Rockset assortment. It’s essential to notice that real-time rollups will constantly run on incoming information. When it comes to out-of-order arrivals, Rockset will course of them and replace the required information precisely as if these occasions truly arrived in-order and on-time. Lastly, Rockset ensures exactly-once semantics for streaming sources, like Kafka and Kinesis.

You possibly can catch the replay of Tyler’s Workplace Hours session on the Rockset Group. You probably have extra questions, please discover Tyler and Nadine within the Rockset Group.

Embedded content material: https://youtu.be/dUrHqoVKC34

Assets:

Rockset is the main real-time analytics platform constructed for the cloud, delivering quick analytics on real-time information with shocking effectivity. Study extra at rockset.com.