Introduction

Stateful processing in Apache Spark™ Structured Streaming has developed considerably to fulfill the rising calls for of complicated streaming functions. Initially, the applyInPandasWithState API allowed builders to carry out arbitrary stateful operations on streaming knowledge. Nevertheless, because the complexity and class of streaming functions elevated, the necessity for a extra versatile and feature-rich API turned obvious. To handle these wants, the Spark neighborhood launched the vastly improved transformWithStateInPandas API, obtainable in Apache Spark™ 4.0, which may now totally change the present applyInPandasWithState operator. transformWithStateInPandas supplies far higher performance reminiscent of versatile knowledge modeling and composite sorts for outlining state, timers, TTL on state, operator chaining, and schema evolution.

On this weblog, we are going to give attention to Python to check transformWithStateInPandas with the older applyInPandasWithState API and use coding examples to point out how transformWithStateInPandas can specific all the things applyInPandasWithState can and extra.

By the tip of this weblog, you’ll perceive the benefits of utilizing transformWithStateInPandas over applyInPandasWithState, how an applyInPandasWithState pipeline could be rewritten as a transformWithStateInPandas pipeline, and the way transformWithStateInPandas can simplify the event of stateful streaming functions in Apache Spark™.

Overview of applyInPandasWithState

applyInPandasWithState is a robust API in Apache Spark™ Structured Streaming that permits for arbitrary stateful operations on streaming knowledge. This API is especially helpful for functions that require customized state administration logic. applyInPandasWithState allows customers to govern streaming knowledge grouped by a key and apply stateful operations on every group.

A lot of the enterprise logic takes place within the func, which has the next kind signature.

For instance, the next operate does a operating depend of the variety of values for every key. It’s price noting that this operate breaks the one duty precept: it’s answerable for dealing with when new knowledge arrives, in addition to when the state has timed out.

A full instance implementation is as follows:

Overview of transformWithStateInPandas

transformWithStateInPandas is a brand new customized stateful processing operator launched in Apache Spark™ 4.0. In comparison with applyInPandasWithState, you’ll discover that its API is extra object-oriented, versatile, and feature-rich. Its operations are outlined utilizing an object that extends StatefulProcessor, versus a operate with a kind signature. transformWithStateInPandas guides you by providing you with a extra concrete definition of what must be applied, thereby making the code a lot simpler to motive about.

The category has 5 key strategies:

init: That is the setup methodology the place you initialize the variables and many others. on your transformation.handleInitialState: This optionally available step allows you to prepopulate your pipeline with preliminary state knowledge.handleInputRows: That is the core processing stage, the place you course of incoming rows of knowledge.handleExpiredTimers: This stage allows you to to handle timers which have expired. That is essential for stateful operations that want to trace time-based occasions.shut: This stage allows you to carry out any essential cleanup duties earlier than the transformation ends.

With this class, an equal fruit-counting operator is proven beneath.

And it may be applied in a streaming pipeline as follows:

Working with state

Quantity and sorts of state

applyInPandasWithState and transformWithStateInPandas differ by way of state dealing with capabilities and adaptability. applyInPandasWithState helps solely a single state variable, which is managed as a GroupState. This enables for easy state administration however limits the state to a single-valued knowledge construction and sort. In contrast, transformWithStateInPandas is extra versatile, permitting for a number of state variables of various sorts. Along with transformWithStateInPandas's ValueState kind (analogous to applyInPandasWithState’s GroupState), it helps ListState and MapState, providing higher flexibility and enabling extra complicated stateful operations. These extra state sorts in transformWithStateInPandas additionally carry efficiency advantages: ListState and MapState enable for partial updates with out requiring the whole state construction to be serialized and deserialized on each learn and write operation. This will considerably enhance effectivity, particularly with massive or complicated states.

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Variety of state objects | 1 | many |

| Kinds of state objects | GroupState (Much like ValueState) |

ValueStateListStateMapState |

CRUD operations

For the sake of comparability, we are going to solely evaluate applyInPandasWithState’s GroupState to transformWithStateInPandas's ValueState, as ListState and MapState don’t have any equivalents. The most important distinction when working with state is that with applyInPandasWithState, the state is handed right into a operate; whereas with transformWithStateInPandas, every state variable must be declared on the category and instantiated in an init operate. This makes creating/organising the state extra verbose, but in addition extra configurable. The opposite CRUD operations when working with state stay largely unchanged.

GroupState (applyInPandasWithState) |

ValueState (transformWithStateInPandas) |

|

|---|---|---|

| create | Creating state is implied. State is handed into the operate by way of the state variable. |

self._state is an occasion variable on the category. It must be declared and instantiated. |

def func(

key: _,

pdf_iter: _,

state: GroupState

) -> Iterator[pandas.DataFrame]

|

class MySP(StatefulProcessor):

def init(self, deal with: StatefulProcessorHandle) -> None:

self._state = deal with.getValueState("state", schema)

|

|

| learn |

state.get # or increase PySparkValueError state.getOption # or return None |

self._state.get() # or return None |

| replace |

state.replace(v) |

self._state.replace(v) |

| delete |

state.take away() |

self._state.clear() |

| exists |

state.exists |

self._state.exists() |

Let’s dig slightly into a few of the options this new API makes attainable. It’s now attainable to

- Work with greater than a single state object, and

- Create state objects with a time to reside (TTL). That is particularly helpful to be used circumstances with regulatory necessities

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Work with a number of state objects | Not Attainable |

class MySP(StatefulProcessor):

def init(self, deal with: StatefulProcessorHandle) -> None:

self._state1 = deal with.getValueState("state1", schema1)

self._state2 = deal with.getValueState("state2", schema2)

|

| Create state objects with a TTL | Not Attainable |

class MySP(StatefulProcessor):

def init(self, deal with: StatefulProcessorHandle) -> None:

self._state = deal with.getValueState(

state_name="state",

schema="c LONG",

ttl_duration_ms=30 * 60 * 1000 # 30 min

)

|

Studying Inside State

Debugging a stateful operation was difficult as a result of it was tough to examine a question’s inside state. Each applyInPandasWithState and transformWithStateInPandas make this straightforward by seamlessly integrating with the state knowledge supply reader. This highly effective function makes troubleshooting a lot less complicated by permitting customers to question particular state variables, together with a variety of different supported choices.

Under is an instance of how every state kind is displayed when queried. Notice that each column, aside from partition_id, is of kind STRUCT. For applyInPandasWithState the whole state is lumped collectively as a single row. So it’s as much as the person to tug the variables aside and explode to be able to get a pleasant breakdown. transformWithStateInPandas provides a nicer breakdown of every state variable, and every ingredient is already exploded into its personal row for simple knowledge exploration.

| Operator | State Class | Learn statestore |

|---|---|---|

applyInPandasWithState |

GroupState |

show(

spark.learn.format("statestore")

.load("/Volumes/foo/bar/baz")

)

|

transformWithStateInPandas |

ValueState |

show(

spark.learn.format("statestore")

.choice("stateVarName", "valueState")

.load("/Volumes/foo/bar/baz")

)

|

ListState |

show(

spark.learn.format("statestore")

.choice("stateVarName", "listState")

.load("/Volumes/foo/bar/baz")

)

|

|

MapState |

show(

spark.learn.format("statestore")

.choice("stateVarName", "mapState")

.load("/Volumes/foo/bar/baz")

)

|

Establishing the preliminary state

applyInPandasWithState doesn’t present a manner of seeding the pipeline with an preliminary state. This made pipeline migrations extraordinarily tough as a result of the brand new pipeline couldn’t be backfilled. However, transformWithStateInPandas has a way that makes this straightforward. The handleInitialState class operate lets customers customise the preliminary state setup and extra. For instance, the person can use handleInitialState to configure timers as properly.

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Passing within the preliminary state | Not attainable |

.transformWithStateInPandas(

MySP(),

"fruit STRING, depend LONG",

"append",

"processingtime",

grouped_df

)

|

| Customizing preliminary state | Not attainable |

class MySP(StatefulProcessor):

def init(self, deal with: StatefulProcessorHandle) -> None:

self._state = deal with.getValueState("countState", "depend LONG")

self.deal with = deal with

def handleInitialState(

self,

key: Tuple[str],

initialState: pd.DataFrame,

timerValues: TimerValues

) -> None:

self._state.replace((initialState.at[0, "count"],))

self.deal with.registerTimer(

timerValues.getCurrentProcessingTimeInMs() + 10000

)

|

So should you’re curious about migrating your applyInPandasWithState pipeline to make use of transformWithStateInPandas, you possibly can simply accomplish that by utilizing the state reader emigrate the inner state of the previous pipeline into the brand new one.

Schema Evolution

Schema evolution is a vital facet of managing streaming knowledge pipelines, because it permits for the modification of knowledge constructions with out interrupting knowledge processing.

With applyInPandasWithState, as soon as a question is began, modifications to the state schema will not be permitted. applyInPandasWithState verifies schema compatibility by checking for equality between the saved schema and the lively schema. If a person tries to change the schema, an exception is thrown, ensuing within the question’s failure. Consequently, any modifications have to be managed manually by the person.

Clients normally resort to certainly one of two workarounds: both they begin the question from a brand new checkpoint listing and reprocess the state, or they wrap the state schema utilizing codecs like JSON or Avro and handle the schema explicitly. Neither of those approaches is especially favored in follow.

However, transformWithStateInPandas supplies extra strong assist for schema evolution. Customers merely must replace their pipelines, and so long as the schema change is suitable, Apache Spark™ will robotically detect and migrate the info to the brand new schema. Queries can proceed to run from the identical checkpoint listing, eliminating the necessity to rebuild the state and reprocess all the info from scratch. The API permits for outlining new state variables, eradicating previous ones, and updating current ones with solely a code change.

In abstract, transformWithStateInPandas's assist for schema evolution considerably simplifies the upkeep of long-running streaming pipelines.

| Schema change | applyInPandasWithState |

transformWithStateInPandas |

|---|---|---|

| Add columns (together with nested columns) | Not Supported | Supported |

| Take away columns (together with nested columns) | Not Supported | Supported |

| Reorder columns | Not Supported | Supported |

| Kind widening (eg. Int → Lengthy) | Not Supported | Supported |

Working with streaming knowledge

applyInPandasWithState has a single operate that’s triggered when both new knowledge arrives, or a timer fires. It’s the person’s duty to find out the rationale for the operate name. The way in which to find out that new streaming knowledge arrived is by checking that the state has not timed out. Due to this fact, it is a finest follow to incorporate a separate code department to deal with timeouts, or there’s a threat that your code won’t work accurately with timeouts.

In distinction, transformWithStateInPandas makes use of totally different features for various occasions:

handleInputRowsknown as when new streaming knowledge arrives, andhandleExpiredTimerknown as when a timer goes off.

In consequence, no extra checks are essential; the API manages this for you.

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Work with new knowledge |

def func(key, rows, state):

if not state.hasTimedOut:

...

|

class MySP(StatefulProcessor):

def handleInputRows(self, key, rows, timerValues):

...

|

Working with timers

Timers vs. Timeouts

transformWithStateInPandas introduces the idea of timers, that are a lot simpler to configure and motive about than applyInPandasWithState’s timeouts.

Timeouts solely set off if no new knowledge arrives by a sure time. Moreover, every applyInPandasWithState key can solely have one timeout, and the timeout is robotically deleted each time the operate is executed.

In distinction, timers set off at a sure time with out exception. You may have a number of timers for every transformWithStateInPandas key, they usually solely robotically delete when the designated time is reached.

Timeouts (applyInPandasWithState) |

Timers (transformWithStateInPandas) |

|

|---|---|---|

| Quantity per key | 1 | Many |

| Set off occasion | If no new knowledge arrives by time x | At time x |

| Delete occasion | On each operate name | At time x |

These variations may appear refined, however they make working with time a lot less complicated. For instance, say you needed to set off an motion at 9 AM and once more at 5 PM. With applyInPandasWithState, you would wish to create the 9 AM timeout first, save the 5 PM one to state for later, and reset the timeout each time new knowledge arrives. With transformWithState, that is straightforward: register two timers, and it’s carried out.

Detecting {that a} timer went off

In applyInPandasWithState, state and timeouts are unified within the GroupState class, which means that the 2 will not be handled individually. To find out whether or not a operate invocation is due to a timeout expiring or new enter, the person must explicitly name the state.hasTimedOut methodology, and implement if/else logic accordingly.

With transformWithState, these gymnastics are now not essential. Timers are decoupled from the state and handled as distinct from one another. When a timer expires, the system triggers a separate methodology, handleExpiredTimer, devoted solely to dealing with timer occasions. This removes the necessity to test if state.hasTimedOut or not – the system does it for you.

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Did a timer go off? |

def func(key, rows, state):

if state.hasTimedOut:

# sure

...

else:

# no

...

|

class MySP(StatefulProcessor):

def handleExpiredTimer(self, key, expiredTimerInfo, timerValues):

when = expiredTimerInfo.getExpiryTimeInMs()

...

|

CRUDing with Occasion Time vs. Processing Time

A peculiarity within the applyInPandasWithState API is the existence of distinct strategies for setting timeouts based mostly on processing time and occasion time. When utilizing GroupStateTimeout.ProcessingTimeTimeout, the person units a timeout with setTimeoutDuration. In distinction, for EventTimeTimeout, the person calls setTimeoutTimestamp as a substitute. When one methodology works, the opposite throws an error, and vice versa. Moreover, for each occasion time and processing time, the one solution to delete a timeout is to additionally delete its state.

In distinction, transformWithStateInPandas gives a extra simple method to timer operations. Its API is constant for each occasion time and processing time; and supplies strategies to create (registerTimer), learn (listTimers), and delete (deleteTimer) a timer. With transformWithStateInPandas, it’s attainable to create a number of timers for a similar key, which tremendously simplifies the code wanted to emit knowledge at numerous time limits.

applyInPandasWithState |

transformWithStateInPandas |

|

|---|---|---|

| Create one |

state.setTimeoutTimestamp(tsMilli) |

self.deal with.registerTimer(tsMilli) |

| Create many | Not attainable |

self.deal with.registerTimer(tsMilli_1) self.deal with.registerTimer(tsMilli_2) |

| learn |

state.oldTimeoutTimestamp |

self.deal with.listTimers() |

| replace |

state.setTimeoutTimestamp(tsMilli) # for EventTime state.setTimeoutDuration(durationMilli) # for ProcessingTime |

self.deal with.deleteTimer(oldTsMilli) self.deal with.registerTimer(newTsMilli) |

| delete |

state.take away() # however this deletes the timeout and the state |

self.deal with.deleteTimer(oldTsMilli) |

Working with A number of Stateful Operators

Chaining stateful operators in a single pipeline has historically posed challenges. The applyInPandasWithState operator doesn’t enable customers to specify which output column is related to the watermark. In consequence, stateful operators can’t be positioned after an applyInPandasWithState operator. Consequently, customers have needed to break up their stateful computations throughout a number of pipelines, requiring Kafka or different storage layers as intermediaries. This will increase each price and latency.

In distinction, transformWithStateInPandas can safely be chained with different stateful operators. Customers merely must specify the occasion time column when including it to the pipeline, as illustrated beneath:

This method lets the watermark data move via to downstream operators, enabling late report filtering and state eviction with out having to arrange a brand new pipeline and intermediate storage.

Conclusion

The brand new transformWithStateInPandas operator in Apache Spark™ Structured Streaming gives important benefits over the older applyInPandasWithState operator. It supplies higher flexibility, enhanced state administration capabilities, and a extra user-friendly API. With options reminiscent of a number of state objects, state inspection, and customizable timers, transformWithStateInPandas simplifies the event of complicated stateful streaming functions.

Whereas applyInPandasWithState should be acquainted to skilled customers, transformWithState's improved performance and flexibility make it the higher alternative for contemporary streaming workloads. By adopting transformWithStateInPandas, builders can create extra environment friendly and maintainable streaming pipelines. Attempt it out for your self in Apache Spark™ 4.0, and Databricks Runtime 16.2 and above.

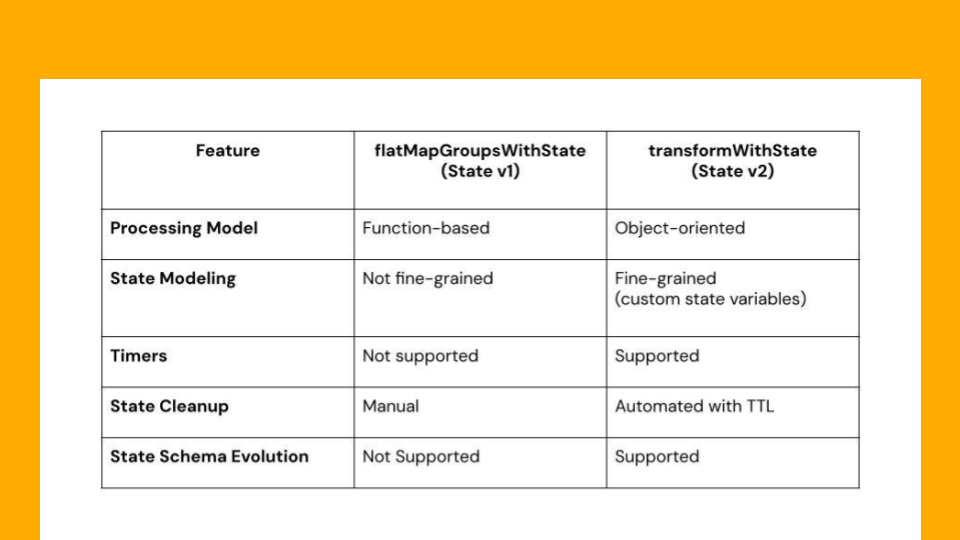

| Function | applyInPandasWithState (State v1) | transformWithStateInPandas (State v2) |

|---|---|---|

| Supported Languages | Scala, Java, and Python | Scala, Java, and Python |

| Processing Mannequin | Perform-based | Object-oriented |

| Enter Processing | Processes enter rows per grouping key | Processes enter rows per grouping key |

| Output Processing | Can generate output optionally | Can generate output optionally |

| Supported Time Modes | Processing Time & Occasion Time | Processing Time & Occasion Time |

| Nice-Grained State Modeling | Not supported (solely single state object is handed) | Supported (customers can create any state variables as wanted) |

| Composite Sorts | Not supported | Supported (presently helps Worth, Checklist and Map sorts) |

| Timers | Not supported | Supported |

| State Cleanup | Guide | Automated with assist for state TTL |

| State Initialization | Partial Assist (solely obtainable in Scala) | Supported in all languages |

| Chaining Operators in Occasion Time Mode | Not Supported | Supported |

| State Knowledge Supply Reader Assist | Supported | Supported |

| State Mannequin Evolution | Not Supported | Supported |

| State Schema Evolution | Not Supported | Supported |