Dimensional modeling is a time-tested approach to building analytics-ready data warehouses. While many organizations are moving to modern platforms like Databricks, these foundational techniques still apply.

In Part 1, we designed our dimensional schema. In Part 2, we built ETL pipelines for dimension tables. Now in Part 3, we implement the ETL logic for fact tables, emphasizing efficiency and integrity.

Fact tables and delta extracts

In the first post, we defined the fact table, FactInternetSales, as shown below. Compared to our dimension tables, the fact table is relatively narrow in terms of record length. It holds only foreign key references to our dimension tables, our fact measures, our degenerate dimension fields and a single metadata field:

NOTE: In the example below, we've altered the CREATE TABLE statement from our first post to include the foreign key definitions instead of defining these in separate ALTER TABLE statements. We've also included a primary key constraint on the degenerate dimension fields to be more explicit about their role in this fact table.
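To make that structure concrete, here is a minimal sketch of a fact table with this shape. It uses Python's built-in sqlite3 module as a stand-in for Databricks SQL. The column list is a hypothetical, trimmed-down subset, not the full FactInternetSales definition, and the degenerate dimension fields are assumed to be SalesOrderNumber and SalesOrderLineNumber.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the warehouse (assumption: not Databricks).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when enabled

conn.executescript("""
CREATE TABLE DimCustomer (
    CustomerKey    INTEGER PRIMARY KEY,  -- surrogate key
    CustomerID     TEXT NOT NULL,        -- business (natural) key
    IsLateArriving INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE DimDate (
    DateKey INTEGER PRIMARY KEY,         -- date smart key
    Date    TEXT NOT NULL
);
-- Hypothetical, trimmed-down fact table: foreign keys declared inline,
-- primary key on the degenerate dimension fields.
CREATE TABLE FactInternetSales (
    CustomerKey          INTEGER NOT NULL REFERENCES DimCustomer (CustomerKey),
    OrderDateKey         INTEGER NOT NULL REFERENCES DimDate (DateKey),
    SalesOrderNumber     TEXT    NOT NULL,
    SalesOrderLineNumber INTEGER NOT NULL,
    OrderQuantity        INTEGER,
    SalesAmount          REAL,
    LastModifiedDateTime TEXT,
    PRIMARY KEY (SalesOrderNumber, SalesOrderLineNumber)
);
""")

# PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
info = conn.execute("PRAGMA table_info(FactInternetSales)").fetchall()
fact_columns = [row[1] for row in info]
pk_columns = [row[1] for row in info if row[5] > 0]

# A fact row pointing at a nonexistent customer is rejected by the foreign key.
try:
    conn.execute("INSERT INTO FactInternetSales VALUES "
                 "(999, 20240101, 'SO1', 1, 1, 9.99, '2024-01-01 00:00:00')")
    fk_enforced = False
except sqlite3.IntegrityError:
    fk_enforced = True
```

The sketch differs from Databricks in one important way. SQLite enforces foreign keys once the pragma is on, but primary and foreign key constraints on Databricks tables are informational and are not enforced.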

The table definition is fairly straightforward, but it's worth taking a moment to discuss the LastModifiedDateTime metadata field. Fact tables are relatively narrow in terms of field count, but they tend to be very deep in terms of row count. Fact tables often house millions, if not billions, of records, typically derived from high-volume operational activities. Instead of reloading the table with a full extract on each ETL cycle, we typically limit our efforts to new records and those that have been modified.

Depending on the source system and its underlying infrastructure, there are many ways to determine which operational records need to be extracted in a given ETL cycle. Change data capture (CDC) capabilities implemented on the operational side are the most reliable mechanisms. When these are unavailable, we often fall back to timestamps recorded with each transaction record as it is created and modified. The approach isn't bulletproof for change detection, but as any experienced ETL developer will attest, it's often the best we've got.

NOTE: The introduction of Lakeflow Connect provides an interesting option for performing change data capture on relational databases. This capability is in preview at the time of writing. As the capability matures to support more and more RDBMSs, we expect it to provide an effective and efficient mechanism for incremental extracts.

In our fact table, the LastModifiedDateTime field captures such a timestamp value recorded in the operational system. Before extracting data from our operational system, we review the fact table to identify the latest value we've recorded for this field. That value becomes the starting point for our incremental (aka delta) extract.

The fact ETL workflow

The high-level workflow for our fact ETL proceeds as follows:

1. Retrieve the latest LastModifiedDateTime value from our fact table.
2. Extract relevant transactional data from the source system with timestamps on or after the latest LastModifiedDateTime value.
3. Perform any additional data cleansing steps required on the extracted data.
4. Publish any late-arriving member values to the relevant dimensions.
5. Look up foreign key values from the relevant dimensions.
6. Publish data to the fact table.

To make this workflow easier to digest, we describe its key phases in the following sections. Unlike the post on dimension ETL, we implement the logic for this workflow using a combination of SQL and Python, choosing whichever language makes each step most straightforward to implement. One of the strengths of the Databricks Platform is its support for multiple languages. Instead of presenting language choice as an all-or-nothing decision made at the start of an implementation, we show how data engineers can quickly pivot between the two within a single implementation.

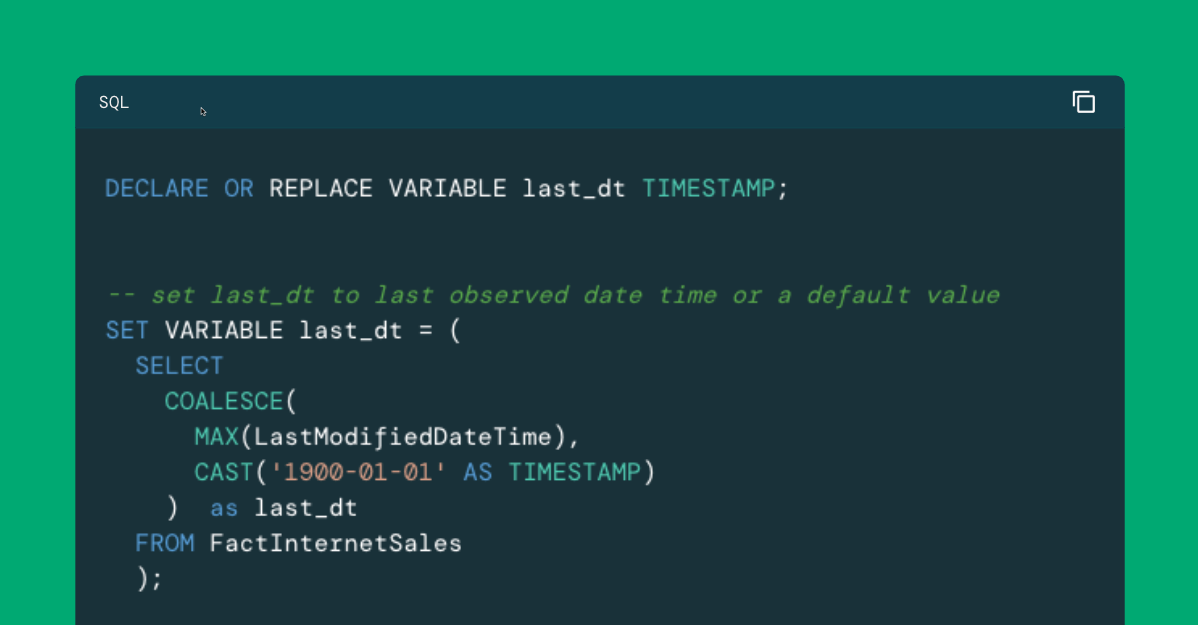

Steps 1-3: Delta extract phase

Our workflow's first two steps focus on extracting new and newly updated records from our operational system. In the first step, we do a simple lookup of the latest recorded value for LastModifiedDateTime. If the fact table is empty, as it should be upon initialization, we define a default value far enough back in time that we believe it will capture all the relevant data in the source system:
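Here is a minimal sketch of this lookup, again using sqlite3 as a stand-in for the warehouse. The 1900-01-01 default is an arbitrary assumption; any value safely older than the source system's earliest record will do. Because MAX() over zero rows yields NULL, COALESCE falls back to the default when the fact table is empty.

```python
import sqlite3

# Hypothetical default: far enough back to capture all source history.
DEFAULT_START = "1900-01-01 00:00:00"

def get_last_modified(conn: sqlite3.Connection, fact_table: str = "FactInternetSales") -> str:
    """Return the latest LastModifiedDateTime in the fact table, or the default if it is empty."""
    (value,) = conn.execute(
        f"SELECT COALESCE(MAX(LastModifiedDateTime), ?) FROM {fact_table}",
        (DEFAULT_START,),
    ).fetchone()
    return value

# An empty fact table falls back to the default...
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE FactInternetSales (SalesOrderNumber TEXT, LastModifiedDateTime TEXT)")
empty_start = get_last_modified(conn)

# ...and a populated one returns its most recent timestamp.
conn.executemany(
    "INSERT INTO FactInternetSales VALUES (?, ?)",
    [("SO1", "2024-03-01 08:00:00"), ("SO2", "2024-03-02 09:30:00")],
)
last_start = get_last_modified(conn)
```

The sketch stores timestamps as ISO-8601 text, which sorts chronologically. A real warehouse column would more likely use a TIMESTAMP type.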

We can now use that value to extract the required data from our operational system. This query includes quite a bit of detail, so focus your attention on the WHERE clause. There, we use the last observed timestamp value from the previous step to retrieve the individual line items that are new or modified, or that are associated with sales orders that are new or modified:
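Here is a sketch of that WHERE-clause pattern using sqlite3. The header and detail source tables (SalesOrderHeader, SalesOrderDetail), their columns and the sample rows are all hypothetical. A line item is picked up if either the line or its parent order changed on or after the cutoff. The later of the two timestamps becomes LastModifiedDateTime.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, simplified order header/detail source tables.
conn.executescript("""
CREATE TABLE SalesOrderHeader (SalesOrderID INTEGER PRIMARY KEY, SalesOrderNumber TEXT,
                               CustomerID TEXT, OrderDate TEXT, ModifiedDate TEXT);
CREATE TABLE SalesOrderDetail (SalesOrderID INTEGER, LineNumber INTEGER,
                               OrderQty INTEGER, LineTotal REAL, ModifiedDate TEXT);
INSERT INTO SalesOrderHeader VALUES
    (1, 'SO1', 'C1', '2024-01-05', '2024-01-05 10:00:00'),  -- old, untouched header
    (2, 'SO2', 'C2', '2024-01-06', '2024-03-01 12:00:00');  -- header modified recently
INSERT INTO SalesOrderDetail VALUES
    (1, 1, 2, 20.0, '2024-01-05 10:00:00'),  -- old line on an old header: skipped
    (1, 2, 1, 15.0, '2024-03-02 08:00:00'),  -- line itself modified recently
    (2, 1, 5, 50.0, '2024-01-06 11:00:00');  -- old line on a modified header
""")

DELTA_EXTRACT_SQL = """
SELECT
    h.SalesOrderNumber,
    d.LineNumber AS SalesOrderLineNumber,
    h.CustomerID,
    h.OrderDate,
    d.OrderQty   AS OrderQuantity,
    d.LineTotal  AS SalesAmount,
    -- scalar two-argument MAX: the later of the two timestamps
    MAX(h.ModifiedDate, d.ModifiedDate) AS LastModifiedDateTime
FROM SalesOrderDetail AS d
JOIN SalesOrderHeader AS h ON h.SalesOrderID = d.SalesOrderID
WHERE h.ModifiedDate >= :last_modified   -- new or modified orders...
   OR d.ModifiedDate >= :last_modified   -- ...or new or modified line items
ORDER BY h.SalesOrderNumber, SalesOrderLineNumber
"""

last_modified = "2024-03-01 00:00:00"  # e.g. the value returned by the previous step
staged = conn.execute(DELTA_EXTRACT_SQL, {"last_modified": last_modified}).fetchall()
```

In this sketch, SQLite's two-argument MAX() plays the role that greatest() would play in Spark SQL. Because the filter uses >= rather than >, rows stamped exactly at the cutoff are extracted again. The match on degenerate dimension fields at publication time makes that harmless.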

As before, the extracted data is persisted to a table in our staging schema, which only our data engineers can access, before the workflow proceeds to subsequent steps. If we have any additional data cleansing to perform, we should do so now.

Step 4: Late-arriving members phase

The typical sequence in a data warehouse ETL cycle is to run our dimension ETL workflows and then our fact workflows shortly after. Organizing our processes this way helps ensure that all the information required to connect our fact records to dimension data will be in place. However, there is a narrow window in which new, dimension-oriented data arrives and is picked up by a fact-relevant transactional record. That window widens if a failure in the overall ETL cycle delays fact data extraction. And, of course, referential failures in source systems can always allow questionable data to appear in a transactional record.

To insulate ourselves from this problem, we insert into a given dimension table any business key values found in our staged fact data but not in the set of current (unexpired) records for that dimension. This approach creates a record with a business (natural) key and a surrogate key that our fact table can reference. If the targeted dimension is a Type-2 SCD, these records are flagged as late arriving so that we can update them appropriately on the next ETL cycle.

To get started, we compile a list of the business key fields in our staging data. Here, we exploit strict naming conventions that allow us to identify these fields dynamically:
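Here is a minimal sketch of this step. It assumes a hypothetical naming convention in which business key columns end in ID and date business keys end in DateID. The column list is hard-coded for illustration. On Databricks it would more likely come from spark.table(...).columns.

```python
# Hypothetical naming convention (an assumption for this sketch):
#   * business keys end in "ID"          (e.g. CustomerID, ProductID)
#   * date business keys end in "DateID" (e.g. OrderDateID, ShipDateID)
staging_columns = [
    "SalesOrderNumber", "SalesOrderLineNumber",
    "CustomerID", "ProductID", "OrderDateID", "ShipDateID",
    "OrderQuantity", "SalesAmount", "LastModifiedDateTime",
]

business_keys = [c for c in staging_columns if c.endswith("ID")]
date_keys = [c for c in business_keys if c.endswith("DateID")]
other_keys = [c for c in business_keys if c not in date_keys]
```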

NOTE: We switch to Python for the following code examples. Databricks supports the use of multiple languages, even within the same workflow. In this example, Python gives us a bit more flexibility while still aligning with SQL concepts, which keeps the approach accessible to more traditional SQL developers.

Notice that we have separated our date keys from the other business keys. We'll return to those in a bit, but for now, let's focus on the non-date (other) keys in this table.

For each non-date business key, we can use our field and table naming conventions to identify the dimension table that should hold that key. We then perform a left-anti join, which is similar to a NOT IN() comparison but supports multi-column matching if needed, to identify any values for that column that appear in the staging table but not in the dimension table. When we find an unmatched value, we simply insert it into the dimension table with the appropriate setting for the IsLateArriving field:
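The sketch below shows the idea with sqlite3, using NOT EXISTS as the anti-join. DimCustomer, its EndDate column (where NULL marks the current row) and the staging table are hypothetical. The dimension name is derived from the key name by the same assumed convention, so CustomerID maps to DimCustomer. Because table and column names are interpolated into the SQL, this pattern is only appropriate when those names come from trusted metadata.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical Type-2 dimension: EndDate IS NULL marks the current (unexpired) row.
CREATE TABLE DimCustomer (
    CustomerKey    INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
    CustomerID     TEXT NOT NULL,                      -- business key
    EndDate        TEXT,
    IsLateArriving INTEGER NOT NULL DEFAULT 0
);
INSERT INTO DimCustomer (CustomerID, EndDate) VALUES
    ('C1', NULL), ('C2', '2024-01-01');  -- C2 has only an expired row
CREATE TABLE staging (CustomerID TEXT, SalesAmount REAL);
INSERT INTO staging VALUES ('C1', 10.0), ('C2', 20.0), ('C3', 30.0), ('C3', 5.0);
""")

def insert_late_arriving(conn: sqlite3.Connection, key: str, staging_table: str = "staging") -> None:
    """Insert business keys found in staging but missing from the dimension's current rows."""
    dim_table = "Dim" + key[: -len("ID")]  # assumed convention: CustomerID -> DimCustomer
    conn.execute(f"""
        INSERT INTO {dim_table} ({key}, IsLateArriving)
        SELECT DISTINCT s.{key}, 1
        FROM {staging_table} AS s
        WHERE s.{key} IS NOT NULL
          AND NOT EXISTS (  -- anti-join against current rows only
              SELECT 1 FROM {dim_table} AS d
              WHERE d.{key} = s.{key} AND d.EndDate IS NULL)
    """)

for key in ["CustomerID"]:  # e.g. the non-date business keys found earlier
    insert_late_arriving(conn, key)

late_members = conn.execute(
    "SELECT CustomerID FROM DimCustomer WHERE IsLateArriving = 1 ORDER BY CustomerID"
).fetchall()
```

C2 is inserted even though an expired row exists for it, because only current rows count as a match.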

This logic would work fine for our date dimension references if we only wanted to ensure that our fact records link to valid entries. However, many downstream BI systems implement logic that requires the date dimension to house a continuous, uninterrupted sequence of dates between the earliest and latest values recorded. If we encounter a date before or after the range of values in the table, we need to do more than insert the missing member. We must also create the additional values required to preserve an unbroken range. For that reason, we need slightly different logic for any late-arriving dates:

If you have not worked much with Databricks or Spark SQL, the query at the heart of this last step is likely unfamiliar. The sequence() function builds a sequence of values from a specified start and stop. The result is an array, which we then expand with the explode() function so that each element of the array forms a row in a result set. From there, we simply compare the required range to what's in the dimension table to identify which elements need to be inserted. That insertion ensures this dimension has a surrogate key value, implemented here as a smart key, that our fact records can reference.
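At its core, that query is set arithmetic: build the full range from the earliest to the latest date, then subtract what the dimension already holds. Here is a plain-Python sketch of the idea, not the Spark SQL query itself. It assumes the smart key takes the common YYYYMMDD integer form. In Spark SQL, the range would typically come from something like explode(sequence(start_date, end_date, interval 1 day)).

```python
from datetime import date, timedelta

def missing_dates(existing: set[date], staged: set[date]) -> list[date]:
    """Dates to insert so the dimension covers an unbroken range including every staged date."""
    all_dates = existing | staged
    if not all_dates:
        return []
    start, stop = min(all_dates), max(all_dates)
    # Plain-Python counterpart of exploding a one-day sequence from start to stop.
    full_range = [start + timedelta(days=i) for i in range((stop - start).days + 1)]
    return [d for d in full_range if d not in existing]

def smart_key(d: date) -> int:
    """Assumed YYYYMMDD integer smart key, e.g. 2024-01-31 -> 20240131."""
    return d.year * 10000 + d.month * 100 + d.day

existing = {date(2024, 1, 1) + timedelta(days=i) for i in range(31)}  # all of January 2024
staged = {date(2023, 12, 30), date(2024, 1, 15), date(2024, 2, 2)}    # one early, one late
to_insert = [(smart_key(d), d.isoformat()) for d in missing_dates(existing, staged)]
```

February 1 is returned even though no staged row references it, because it is needed to keep the range unbroken.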

Steps 5-6: Data publication phase

Now that we can be confident that every business key in our staging table can be matched to records in its corresponding dimension, we can proceed with publication to the fact table.

The first step in this process is to look up the foreign key values for these business keys. This could be done as part of a single publication step, but the large number of joins in such a query often makes that approach challenging to maintain. For this reason, we might instead look up foreign key values one business key at a time and append those values to our staging table. This approach is less efficient but easier to understand and modify:

Again, we exploit naming conventions to make this logic more straightforward to implement. Our date dimension is a role-playing dimension and therefore follows a more variable naming convention, so we implement slightly different logic for those business keys.
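Here is a sketch of the one-key-at-a-time lookup using sqlite3 and the same hypothetical naming conventions as before. CustomerID resolves to CustomerKey in DimCustomer, matching only the current row (EndDate IS NULL). Each date role, such as OrderDateID or ShipDateID, resolves against the single DimDate table into a role-specific column such as OrderDateKey. All table names, column names and values are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimCustomer (CustomerKey INTEGER PRIMARY KEY, CustomerID TEXT, EndDate TEXT);
INSERT INTO DimCustomer VALUES (1, 'C1', '2024-01-01'), (2, 'C1', NULL), (3, 'C2', NULL);
CREATE TABLE DimDate (DateKey INTEGER PRIMARY KEY, Date TEXT);
INSERT INTO DimDate VALUES (20240105, '2024-01-05'), (20240110, '2024-01-10');
CREATE TABLE staging (SalesOrderNumber TEXT, CustomerID TEXT, OrderDateID TEXT, ShipDateID TEXT);
INSERT INTO staging VALUES ('SO1', 'C1', '2024-01-05', '2024-01-10'),
                           ('SO2', 'C2', '2024-01-10', '2024-01-10');
""")

def add_surrogate_key(conn: sqlite3.Connection, business_key: str, staging_table: str = "staging") -> None:
    """Append <Entity>Key to staging by matching the business key to the current dimension row."""
    entity = business_key[: -len("ID")]         # CustomerID -> Customer
    dim, sk = f"Dim{entity}", f"{entity}Key"    # DimCustomer, CustomerKey
    conn.execute(f"ALTER TABLE {staging_table} ADD COLUMN {sk} INTEGER")
    conn.execute(f"""
        UPDATE {staging_table} SET {sk} = (
            SELECT d.{sk} FROM {dim} AS d
            WHERE d.{business_key} = {staging_table}.{business_key} AND d.EndDate IS NULL)
    """)

def add_date_key(conn: sqlite3.Connection, date_key: str, staging_table: str = "staging") -> None:
    """Role-playing date dimension: every date role resolves against the one DimDate table."""
    sk = date_key[: -len("ID")] + "Key"         # OrderDateID -> OrderDateKey
    conn.execute(f"ALTER TABLE {staging_table} ADD COLUMN {sk} INTEGER")
    conn.execute(f"""
        UPDATE {staging_table} SET {sk} = (
            SELECT d.DateKey FROM DimDate AS d WHERE d.Date = {staging_table}.{date_key})
    """)

for key in ["CustomerID"]:                      # hypothetical non-date business keys
    add_surrogate_key(conn, key)
for key in ["OrderDateID", "ShipDateID"]:       # hypothetical date roles
    add_date_key(conn, key)

rows = conn.execute(
    "SELECT SalesOrderNumber, CustomerKey, OrderDateKey, ShipDateKey FROM staging ORDER BY 1"
).fetchall()
```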

At this point, our staging table houses business keys and surrogate key values along with our measures, degenerate dimension fields and the LastModifiedDateTime value extracted from our source system. To make publication more manageable, we should align the available fields with those supported by the fact table. To do that, we need to drop the business keys:
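Here is a minimal plain-Python sketch of this step, with hypothetical staged rows and column names. In PySpark, the equivalent would typically be DataFrame.drop(*business_keys). The final check catches drift between the staged columns and the fact table's definition before publication.

```python
# Hypothetical staged row after the key lookups: business keys plus their surrogate keys.
staged_rows = [
    {"SalesOrderNumber": "SO1", "SalesOrderLineNumber": 1,
     "CustomerID": "C1", "CustomerKey": 2,
     "OrderDateID": "2024-01-05", "OrderDateKey": 20240105,
     "SalesAmount": 15.0, "LastModifiedDateTime": "2024-03-02 08:00:00"},
]
business_keys = ["CustomerID", "OrderDateID"]

# Hypothetical target column list for the fact table.
fact_columns = ["CustomerKey", "OrderDateKey", "SalesOrderNumber",
                "SalesOrderLineNumber", "SalesAmount", "LastModifiedDateTime"]

# Drop the business keys so the remaining fields line up with the fact table.
aligned = [{k: v for k, v in row.items() if k not in business_keys} for row in staged_rows]

# Fail fast if anything is extra to, or missing from, the fact table definition.
for row in aligned:
    extra, missing = set(row) - set(fact_columns), set(fact_columns) - set(row)
    if extra or missing:
        raise ValueError(f"column mismatch: extra={sorted(extra)} missing={sorted(missing)}")
```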

NOTE: The source dataframe is defined in the previous code block.

With the fields aligned, the publication step is straightforward. We match our incoming records to those in the fact table on the degenerate dimension fields, which serve as a unique identifier for our fact records, and then update or insert values as needed:
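Here is a sketch of that match-then-update-or-insert step. It uses SQLite's UPSERT (INSERT ... ON CONFLICT DO UPDATE) as a stand-in for the MERGE INTO ... WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT pattern typically written on Databricks. The tables and rows are hypothetical. The conflict target is the primary key on the degenerate dimension fields.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE FactInternetSales (
    SalesOrderNumber TEXT, SalesOrderLineNumber INTEGER,
    CustomerKey INTEGER, SalesAmount REAL, LastModifiedDateTime TEXT,
    PRIMARY KEY (SalesOrderNumber, SalesOrderLineNumber)  -- degenerate dimension fields
);
INSERT INTO FactInternetSales VALUES ('SO1', 1, 2, 10.0, '2024-01-05 10:00:00');
CREATE TABLE staging AS SELECT * FROM FactInternetSales WHERE 0;  -- same columns, empty
INSERT INTO staging VALUES
    ('SO1', 1, 2, 12.5, '2024-03-02 08:00:00'),  -- existing line, changed amount
    ('SO2', 1, 3, 50.0, '2024-03-01 12:00:00');  -- brand-new line
""")

# Matched rows are updated; unmatched rows are inserted.
conn.execute("""
INSERT INTO FactInternetSales
SELECT * FROM staging WHERE true  -- SQLite needs a WHERE here to parse the UPSERT unambiguously
ON CONFLICT (SalesOrderNumber, SalesOrderLineNumber) DO UPDATE SET
    CustomerKey          = excluded.CustomerKey,
    SalesAmount          = excluded.SalesAmount,
    LastModifiedDateTime = excluded.LastModifiedDateTime
""")

fact_rows = conn.execute("SELECT * FROM FactInternetSales ORDER BY 1, 2").fetchall()
```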

Next steps

We hope this blog series has been informative to those seeking to build dimensional models on the Databricks Platform. We expect that many practitioners experienced with this data modeling approach and its associated ETL workflows will find Databricks familiar, accessible and able to support long-established patterns with minimal changes from what they may have implemented on RDBMS platforms. Where changes do emerge, such as the ability to implement workflow logic with a combination of Python and SQL, we hope data engineers will find that they make their work easier to implement and support over time.

To learn more about Databricks SQL, visit our website or read the documentation. You can also check out the product tour for Databricks SQL. If you want to migrate your existing warehouse to a high-performance, serverless data warehouse with a great user experience and lower total cost, Databricks SQL is the solution. Try it for free.