OpenAI models have advanced dramatically over the past few years. The journey began with GPT-3.5 and has now reached GPT-5.1 and the newer o-series reasoning models. While ChatGPT uses GPT-5.1 as its primary model, the API gives you access to many more options that are designed for different kinds of tasks. Some models are optimized for speed and cost, others are built for deep reasoning, and some specialize in images or audio.

In this article, I’ll walk you through all the major models available through the API. You’ll learn what each model is best suited for, which type of project it fits, and how to work with it using simple code examples. The goal is to give you a clear understanding of when to choose a specific model and how to use it effectively in a real application.

GPT-3.5 Turbo: The Foundation of Modern AI

GPT-3.5 Turbo kicked off the generative AI revolution. It powered the original ChatGPT and remains a stable, low-cost option for simple tasks. The model is tuned for following instructions and holding a conversation. It can answer questions, summarize text, and write simple code. Newer models are smarter, but GPT-3.5 Turbo is still useful for high-volume tasks where cost is the main consideration.

Key Features:

- Speed and Cost: It is very fast and very cheap.

- Instruction Following: It reliably follows simple prompts.

- Context: The original model supports a 4K-token window (roughly 3,000 words); later versions extend it to 16K tokens.

Hands-on Example:

The following short Python script uses GPT-3.5 Turbo for text summarization.

import openai
from google.colab import userdata  # Colab secrets helper; outside Colab, load the key another way

# Create the API client with your key
client = openai.OpenAI(api_key=userdata.get('OPENAI_KEY'))

# The system message sets the behavior; the user message carries the text to summarize
messages = [
    {"role": "system", "content": "You are a helpful summarization assistant."},
    {"role": "user", "content": "Summarize this: OpenAI changed the tech world with GPT-3.5 in 2022."}
]

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=messages
)

# The generated summary is in the first choice
print(response.choices[0].message.content)
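
Because the context window is limited, longer documents have to be split before they can be summarized. The following is a minimal sketch, assuming the rule of thumb quoted above (4K tokens is roughly 3,000 words, so about 0.75 words per token). `chunk_text` and its parameters are hypothetical helpers, not part of the OpenAI SDK, and a real tokenizer would give exact counts.

```python
def chunk_text(text, max_tokens=3000, words_per_token=0.75):
    """Split text into word-based chunks that should fit a token budget.

    Assumption: about 0.75 words per token, which is only an estimate.
    """
    max_words = max(1, int(max_tokens * words_per_token))
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


if __name__ == "__main__":
    sample = "word " * 5000
    chunks = chunk_text(sample)
    print(len(chunks), "chunks")
```

Each chunk can then be sent as its own summarization request, and the partial summaries combined in a final call.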

GPT-4 Family: Multimodal Powerhouses

The GPT-4 family was a major breakthrough. The series includes GPT-4, GPT-4 Turbo, and the highly efficient GPT-4o. These models are multimodal, meaning they can understand both text and images. Their main strength lies in complex reasoning, legal analysis, and sophisticated creative writing.

GPT-4o Features:

- Multimodal Input: It handles text and images in the same request.

- Speed: GPT-4o (the “o” stands for “omni”) is about twice as fast as GPT-4 Turbo, according to OpenAI.

- Cost: It is much cheaper than the original GPT-4 model.
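
To make the multimodal input concrete, here is a minimal sketch of a user message that carries both text and an image, assuming the `image_url` content-part format of the Chat Completions API with a base64 data URL. `build_image_message` and the file name `chart.png` are hypothetical and used only for illustration.

```python
import base64


def build_image_message(question, image_bytes, mime_type="image/png"):
    """Build a user message that combines text with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Hypothetical usage (needs an API key and a real image file):
# with open("chart.png", "rb") as f:
#     message = build_image_message("What does this chart show?", f.read())
# response = client.chat.completions.create(model="gpt-4o", messages=[message])
```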

An OpenAI study reported that GPT-4 passed a simulated bar exam with a score in the top 10 percent of test takers. This points to its ability to handle sophisticated logic.

Hands-on Example (Complex Logic):

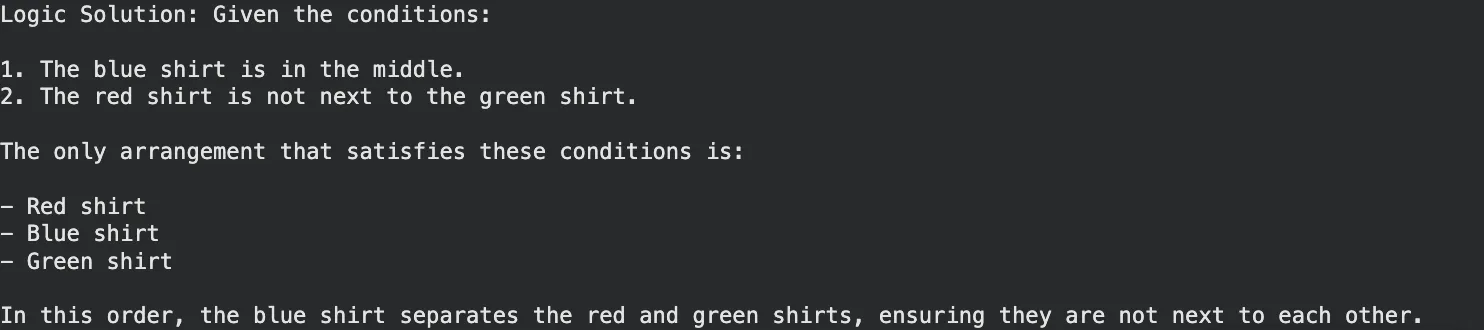

GPT-4o can solve a logic puzzle that requires reasoning.

# Adjacent string literals are joined into one prompt
messages = [
    {"role": "user", "content": "I have 3 shirts. One is red, one blue, one green. "
                                "The red is not next to the green. The blue is in the middle. "
                                "What is the order?"}
]

response = client.chat.completions.create(
    model="gpt-4o",
    messages=messages
)

print("Logic Solution:", response.choices[0].message.content)

The o-Series: Models That Think Before They Speak

In late 2024 and early 2025, OpenAI introduced the o-series (o1, o1-mini, and o3-mini). These are “reasoning models.” Unlike the standard GPT models, they do not answer immediately but take time to think and work out a strategy. This makes them stronger at math, science, and difficult coding problems.

o1 and o3-mini Highlights:

- Chain of Thought: The model checks its own steps internally, which reduces errors.

- Coding Prowess: o3-mini is designed to be fast and accurate at coding tasks.

- Efficiency: o3-mini is a highly capable model at a lower price than the full o1 model.
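
How long a reasoning model thinks can be adjusted per request. The sketch below assumes the `reasoning_effort` parameter that the Chat Completions API accepts for o-series models, with the values `low`, `medium`, and `high`. `build_reasoning_request` is a hypothetical helper that only assembles the keyword arguments.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_reasoning_request(prompt, effort="medium", model="o3-mini"):
    """Return keyword arguments for client.chat.completions.create()."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}")
    return {
        "model": model,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


# Hypothetical usage with an existing client:
# kwargs = build_reasoning_request("Prove that 17 is prime.", effort="high")
# response = client.chat.completions.create(**kwargs)
```

Higher effort generally trades latency and cost for more careful answers, so it is worth reserving for problems that need it.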

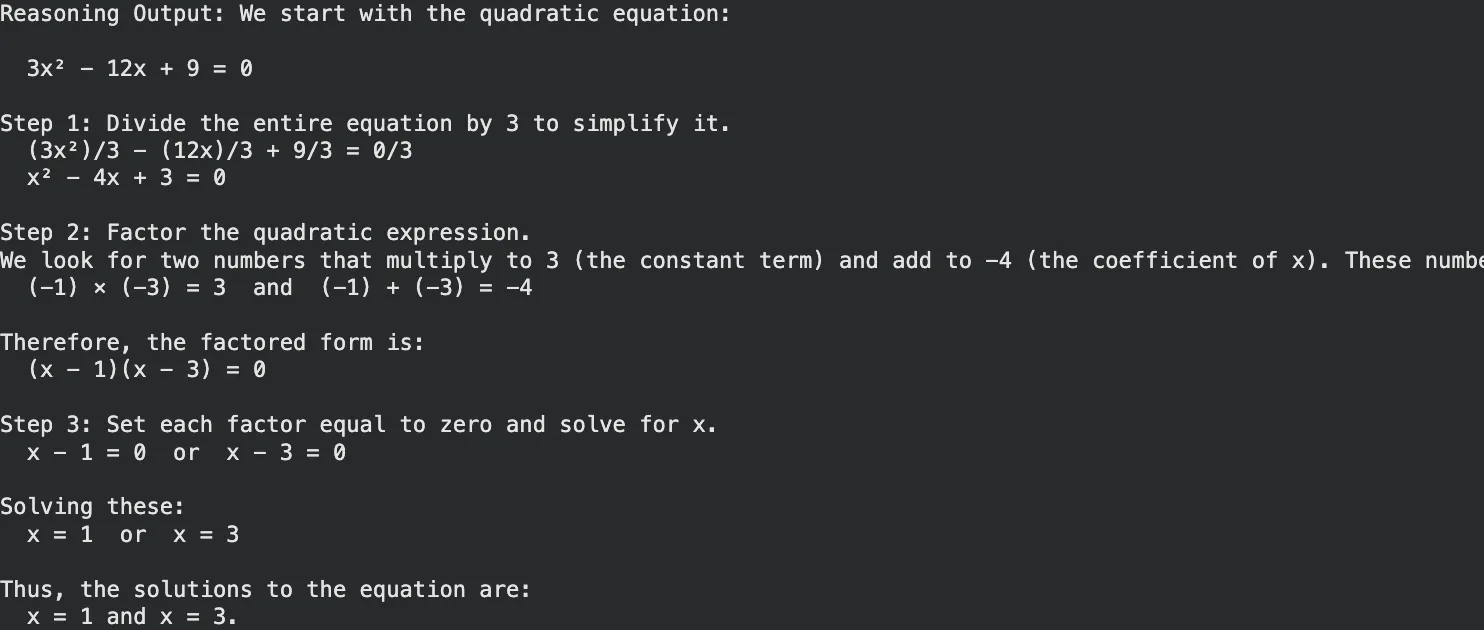

Hands-on Example (Math Reasoning):

Use o3-mini for a math problem where step-by-step verification is important.

# Using the o3-mini reasoning model
response = client.chat.completions.create(
    model="o3-mini",
    messages=[{"role": "user", "content": "Solve for x: 3x^2 - 12x + 9 = 0. Explain steps."}]
)

print("Reasoning Output:", response.choices[0].message.content)
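
Whatever the model returns, it helps to check the answer independently. This small sketch solves the same equation with the quadratic formula; `solve_quadratic` is a hypothetical helper written for this check and has nothing to do with the OpenAI SDK.

```python
import math


def solve_quadratic(a, b, c):
    """Return the real roots of a*x^2 + b*x + c = 0 in ascending order."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    disc = b * b - 4 * a * c
    if disc < 0:
        return []  # no real roots
    root = math.sqrt(disc)
    # A set removes the duplicate when the discriminant is zero
    return sorted({(-b - root) / (2 * a), (-b + root) / (2 * a)})


roots = solve_quadratic(3, -12, 9)
print(roots)  # [1.0, 3.0]
```

The model's explanation should therefore arrive at x = 1 and x = 3.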

GPT-5 and GPT-5.1: The Next Generation

GPT-5, released in mid-2025, and its refined successor GPT-5.1 combine speed with reasoning. GPT-5 offers built-in thinking, in which the model itself decides when to reason at length and when to answer quickly. GPT-5.1 is refined to offer better enterprise controls and fewer hallucinations.

What sets them apart:

- Adaptive Thinking: It sends simple queries down fast routes and escalates hard ones to deeper reasoning routes.

- Enterprise Grade: GPT-5.1 offers deep research with Pro features.

- GPT Image 1: A built-in tool that replaces DALL-E 3 and provides smooth image creation in chat.

Hands-on Example (Business Strategy):

GPT-5.1 excels at high-level strategy, which calls for broad knowledge and structured thinking.

# Example using GPT-5.1 for strategic planning
response = client.chat.completions.create(
    model="gpt-5.1",
    messages=[{"role": "user", "content": "Draft a go-to-market strategy for a new AI coffee machine."}]
)

print("Strategy Draft:", response.choices[0].message.content)

DALL-E 3 and GPT Image: Visual Creativity

For visual content, OpenAI offers DALL-E 3 and the newer GPT Image models. These models turn text prompts into detailed images. With DALL-E 3, you can create illustrations, logos, and diagrams just by describing them.


Key Capabilities:

- Prompt Following: It closely follows detailed instructions.

- Integration: It is built into ChatGPT and the API.

Hands-on Example (Image Generation):

This script generates an image URL based on your text prompt.

image_response = client.images.generate(
    model="dall-e-3",
    prompt="A futuristic city with flying cars in a cyberpunk style",
    n=1,
    size="1024x1024"
)

# The response contains a URL pointing to the generated image
print("Image URL:", image_response.data[0].url)
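
Resolution and quality settings affect both the result and the price, so it can be useful to validate them before sending a request. The sketch below assumes the options documented for `dall-e-3`: three sizes, the `standard` and `hd` quality levels, and one image per request. `build_dalle3_request` is a hypothetical helper that only assembles the arguments.

```python
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}


def build_dalle3_request(prompt, size="1024x1024", quality="standard"):
    """Return keyword arguments for client.images.generate()."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"size must be one of {sorted(DALLE3_SIZES)}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"quality must be one of {sorted(DALLE3_QUALITIES)}")
    # dall-e-3 produces one image per request, so n is fixed at 1
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "n": 1,
        "size": size,
        "quality": quality,
    }


# Hypothetical usage with an existing client:
# image_response = client.images.generate(**build_dalle3_request("A red fox", quality="hd"))
```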

Whisper: Speech-to-Text Mastery

Whisper is OpenAI’s state-of-the-art speech recognition system. It can transcribe audio in dozens of languages and translate it into English. It is robust to background noise and accents. The following snippet shows how simple it is to use.

Hands-on Example (Transcription):

Make sure you are in a directory that contains an audio file named speech.mp3.

# Open the audio file in binary mode; the with-block closes it afterwards
with open("speech.mp3", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file
    )

print("Transcription:", transcript.text)
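
Transcription keeps the spoken language. To get English text from non-English audio, the API has a separate translations endpoint. This is a minimal sketch, assuming an already configured client is passed in; `translate_to_english` is a hypothetical wrapper around `client.audio.translations.create`.

```python
def translate_to_english(client, audio_file):
    """Translate speech from an open binary audio file into English text."""
    result = client.audio.translations.create(
        model="whisper-1",
        file=audio_file,
    )
    return result.text


# Hypothetical usage with an existing client and a local file:
# with open("speech.mp3", "rb") as f:
#     print(translate_to_english(client, f))
```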

Embeddings and Moderation: The Utility Tools

OpenAI also offers utility models that are important for developers.

- Embeddings (text-embedding-3-small/large): These encode text as numbers (vectors). This lets you build search engines that match on meaning rather than keywords.

- Moderation: This is a free API that checks text for hate speech, violence, or self-harm to help keep apps safe.
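
A moderation check typically runs before user text reaches any other model. The sketch below assumes an already configured client and the `omni-moderation-latest` model name; `is_flagged` is a hypothetical wrapper that returns only the overall verdict.

```python
def is_flagged(client, text, model="omni-moderation-latest"):
    """Return True if the Moderation API flags the given text."""
    response = client.moderations.create(model=model, input=text)
    # Each input gets one result; "flagged" is the overall verdict
    return response.results[0].flagged


# Hypothetical usage with an existing client:
# if is_flagged(client, user_message):
#     print("Message blocked by moderation.")
```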

Hands-on Example (Semantic Search):

This example creates the embeddings you would compare to measure how similar a query is to a product.

# Get embeddings for both inputs in a single request
resp = client.embeddings.create(
    input=["smartphone", "banana"],
    model="text-embedding-3-small"
)

# In a real app, you compare these vectors to find the best match
print("Vector created with dimension:", len(resp.data[0].embedding))
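
The comparison step is usually done with cosine similarity. Below is a minimal sketch in plain Python; the three-dimensional vectors are made-up stand-ins for real embeddings, and `cosine_similarity` and `best_match` are hypothetical helpers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query_vec, candidates):
    """Return the label whose vector is most similar to the query vector."""
    return max(
        candidates,
        key=lambda label: cosine_similarity(query_vec, candidates[label]),
    )


# Toy vectors standing in for real embeddings
toy = {"smartphone": [0.9, 0.1, 0.0], "banana": [0.0, 0.2, 0.9]}
print(best_match([1.0, 0.0, 0.1], toy))  # smartphone
```

With real embeddings, the query vector would come from a separate `embeddings.create` call on the user's search text.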

Fine-Tuning: Customizing Your AI

Fine-tuning lets you train a model on your own data. GPT-4o-mini or GPT-3.5 can be fine-tuned to pick up a specific tone, format, or industry jargon. This is powerful for enterprise applications that need more than generic responses.

How it works:

- Prepare a JSONL file with training examples (one JSON object per line).

- Upload the file to OpenAI.

- Start a fine-tuning job.

- Use your new custom model ID in the API.
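
The steps above can be sketched as follows, assuming the chat-format training data (one `messages` object per line) and the `files.create` and `fine_tuning.jobs.create` calls of the OpenAI Python SDK. `to_jsonl` and `start_fine_tune` are hypothetical helpers, and the base model snapshot name is an assumption that may need updating.

```python
import json


def to_jsonl(examples):
    """Serialize (user_text, assistant_text) pairs as chat-format JSONL."""
    lines = []
    for user_text, assistant_text in examples:
        record = {
            "messages": [
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        lines.append(json.dumps(record))
    return "".join(line + "\n" for line in lines)


def start_fine_tune(client, jsonl_path, base_model="gpt-4o-mini-2024-07-18"):
    """Upload the training file, start a job, and return the job ID."""
    with open(jsonl_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(
        training_file=uploaded.id,
        model=base_model,
    )
    return job.id
```

Once the job finishes, the resulting model ID is passed as `model` in ordinary chat completion calls.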

Conclusion

The OpenAI model landscape offers a tool for nearly every digital task. From the speed of GPT-3.5 Turbo to the reasoning power of o3-mini and GPT-5.1, developers have a wide range of options. You can build voice applications with Whisper, create visual assets with DALL-E 3, or analyze data with the latest reasoning models.
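
As a rough summary of the recommendations in this guide, a simple lookup can map a task type to a model. The task labels and `pick_model` are hypothetical, and the mapping is one reading of the guidance above rather than an official routing table.

```python
# Task labels are illustrative; model names are the ones used in this guide
MODEL_BY_TASK = {
    "high_volume_chat": "gpt-3.5-turbo",
    "multimodal": "gpt-4o",
    "math_or_code_reasoning": "o3-mini",
    "strategy": "gpt-5.1",
    "image_generation": "dall-e-3",
    "transcription": "whisper-1",
    "semantic_search": "text-embedding-3-small",
}


def pick_model(task, default="gpt-4o"):
    """Return the suggested model for a task, or a general-purpose default."""
    return MODEL_BY_TASK.get(task, default)


print(pick_model("transcription"))  # whisper-1
```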

The barriers to entry remain low. You simply need an API key and an idea. We encourage you to test the scripts provided in this guide. Experiment with the different models to understand their strengths. Find the right balance of cost, speed, and intelligence for your specific needs. The technology exists to power your next application. It’s now up to you to apply it.

Frequently Asked Questions

Q. What is the difference between GPT-4o and o3-mini?

A. GPT-4o is a general-purpose multimodal model best for most tasks. o3-mini is a reasoning model optimized for complex math, science, and coding problems.

Q. Is DALL-E 3 free to use through the API?

A. No, DALL-E 3 is a paid model priced per image generated. Costs vary based on resolution and quality settings.

Q. Can I run Whisper locally?

A. Yes, the Whisper model is open-source. You can run it on your own hardware without paying API fees, ideally on a machine with a GPU.

Q. How much context can GPT-5.1 handle?

A. GPT-5.1 supports a large context window (often 128k tokens or more), allowing it to process entire books or long codebases in a single pass.

Q. How can I access these models?

A. These models are available to developers via the OpenAI API and to users through ChatGPT Plus, Team, or Enterprise subscriptions.
